There's only one place to see the future of technology before it arrives: CES. Every year, the world's tech companies converge in Las Vegas to give everyone a glimpse of all the new tech gizmos and trends to come.

Mobile Devices

ASUS ZenFone AR

Google's Tango technology has been in the works for a long time, but we didn't love the first phone that made use of this tech. The second Tango phone, ASUS' ZenFone AR, addresses most of our biggest concerns. It's actually pocketable, for one, and packs a high-end Snapdragon chipset, along with a whopping 8GB of RAM. It's more than just an AR machine too: It also supports Google's Daydream VR platform.

ZTE Blade V8 Pro

ZTE's Blade line of smartphones is well-known abroad, but the company is finally bringing it to the US with the Blade V8 Pro. More importantly, it packs plenty of goodies, considering its $230 price. There's a bright 5.5-inch full HD display, for starters, along with a solid body that feels better than other mid-range phones we've seen at the show. It also rocks a surprisingly good dual camera setup. Who says every important phone has to be pricey?

-------------------------

Wearable

Casio Android Wear watch

The fact that the Casio WSD-F20 is one of the first smartwatches to run Android Wear 2.0 is nice, but it's the device's built-in low-power GPS and offline maps in color that have us paying closer attention. The rugged watch has new button guards and a protective bezel that make it hardier than its predecessor, which is important for its target audience. It may be pricier than a typical Android Wear watch, but the Casio WSD-F20 offers enough differentiating features to justify that premium, at least for outdoor enthusiasts.

Everlast and PIQ boxing glove

There are plenty of activity trackers for golf, tennis and many other sports, but what about boxing? Everlast and PIQ have teamed up to attach a motion sensor to a boxing glove to track your face-punching. That data is then crunched to tell you the type of punch, impact and force that you produce during your sparring sessions. You can then post it to social media -- you know, to warn your Facebook friends not to mess with you on a night out.

-------------------------

PC Tech

Razer Project Ariana

Projectors have never been this cool before. Ever. Another wild concept from Razer, Project Ariana is a projector that extends your computer display or TV onto your walls for a more immersive experience. Using two depth sensors, Ariana scans your room and then tells its 155-degree fisheye lens to project an expanded field of view around your main display. The result is an impressive interactive light and projection show that augments whatever is happening on the screen.

NVIDIA GeForce Now for Mac and PCs

NVIDIA GeForce Now is a game streaming service that lets you rent a virtual gaming PC to play your games on the move. It lets you play any game you own on something as weak as a MacBook Air, for a price.

Source : Engadget

Author of this post :

CES is the tech world's big coming-out party for the New Year — a supersize circus of gadgetry that both follows major trends in tech and creates them. It’s never the same year to year, but it’s always a showcase of what’s to come in consumer technology.

Smart Devices

Helia Smart Light Bulbs

Called Helia, Soraa's new line of LED bulbs aims to recreate the feel of natural sunlight. The bulbs, which rely on sensors rather than Wi-Fi, emit bright blue light in the morning when you're waking up and then slowly reduce the amount of blue light until the sun sets and all blue light is eliminated. It's kind of like iOS's Night Shift mode, and the result is light that's easier on your tired eyes (and brain) and less disruptive to your sleep.

AIRE Digestive Tracker

The AIRE by FoodMarble is a portable app-connected gadget that is able to analyze the amount of gas in your bloodstream the way a breathalyzer would monitor your blood alcohol level. According to FoodMarble, certain foods that don't gel with your system cause a buildup of gas in your gut. Using a series of breath tests and food tracking, the AIRE can help you find out which foods don't agree with your body so that you can live a more comfortable life.

Bodyfriend Aventar Massage Chair

The Bodyfriend Aventar looks more like a luxury sports car than something that will give you an impressive massage. When you sit in the chair, it scans your body and creates a massage tailored to you by measuring your shoulder height and width, leg length, weight and height. Using its connected app, you can set preferences for individual users and choose massages for specific areas of your body, so if you just want to ease the pain in your feet without the full experience, you can select just a foot massage. Best of all, the chair’s got a 5.1 speaker system that connects wirelessly to your TV.

Willow Hands-free Breast Pump

Willow's hands-free breast pump could be a game-changer for new mothers tired of noisy and cumbersome electric breast pumps. The two wearable pumps slip into the wearer's bra and simply pump until their bags are full. The product is remarkably quiet, so it can be worn out of the house while women go about their daily activities. Willow is also FDA-approved and simple to clean, with parts that are dishwasher safe. Of course, an intuitive, hands-free pump doesn't come cheap -- when it launches this spring, it will retail for $429.99, plus $0.50 per milk storage bag.

PowerRay underwater fishing robot

PowerRay is an underwater drone for fishing that's able to dive to around 100 feet. Equipped with sonar, PowerRay can detect fish at distances of up to 120 feet beneath it and lure them in with a special fish-attracting light. Should the day's potential catch still prove to be elusive, PowerRay has a remote-controlled bait-drop system that should do the trick. For the pilots up on deck, there's WiFi connectivity and a real-time video feed from the onboard 4K camera of what's going on down below. For the real high-tech angler, that video feed can also be viewed in, and controlled by, VR goggles.

Lego Boost

Lego creations are about to get way more fun. Priced at $160 per set, the new Lego Boost teaches your kids how to bring their building blocks to life with code. Thanks to a mix of sensors and motors, the Lego creation can be programmed with an app to follow different commands, like moving or rolling around depending on what you're building. There are five different models, including a cat and a guitar. The Boost will be available in August.

Source1 : Mashable Source2 : Engadget

In today’s world, where cloud storage is replacing small storage devices like pen drives, uploading large numbers of files to cloud services like Google Drive or OneDrive can become tedious. So how can we make this task easy and convenient? We can use the Send To menu in Windows, which is accessible by right-clicking a file.

Requirements.

- 1. A Windows PC.

- 2. OneDrive or Google Drive already installed on the PC.

Adding OneDrive to the Send To menu.

For this demo, we are using OneDrive, but you can do the same with Google Drive. This is one of the most effective ways to use the right-click Send To menu on your Windows PC. Let's get started!

1. Open the Run dialog from the Start menu, or simply press Windows Key + R.

2. Type "shell:sendto" in the Run box and press Enter. This opens the SendTo folder, where we will create a shortcut for OneDrive.

We can also navigate to C:\Users\USERNAME\AppData\Roaming\Microsoft\Windows\SendTo, where USERNAME is your Windows user account name.

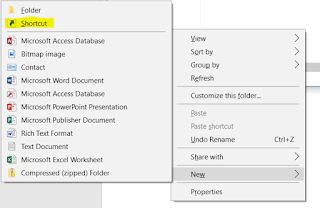

3. Right-click an empty area of the SendTo folder, then choose New and select Shortcut.

4. In the location field, browse to your OneDrive or Google Drive folder.

5. Give the shortcut the name you want to see in the Send To menu.

6. Right-click the file you want to upload, point to Send To, and choose your new shortcut. The file will be uploaded to OneDrive or Google Drive, depending on which shortcut you select.

We can also edit the Send To menu for many other purposes, such as sending files to a specific drive (C:, E:, etc.) or a specific folder, or removing unnecessary items like Fax recipient.
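If you want to confirm where the shortcut has to go, the location can also be worked out in code. Below is a minimal Python sketch, assuming a default per-user profile where shell:sendto resolves to the SendTo folder under %APPDATA%. The helper names sendto_folder and shortcut_path are hypothetical, and the sketch only builds paths; the shortcut itself is still created with the New > Shortcut steps.

```python
import ntpath
import os


def sendto_folder(appdata: str) -> str:
    """Return the per-user SendTo folder for a given %APPDATA% value."""
    # Assumption: a default profile, where shell:sendto maps to
    # %APPDATA%\Microsoft\Windows\SendTo.
    return ntpath.join(appdata, "Microsoft", "Windows", "SendTo")


def shortcut_path(appdata: str, display_name: str) -> str:
    """Return where a shortcut must live to appear as display_name in Send To."""
    # The menu shows the shortcut's file name without the .lnk extension.
    return ntpath.join(sendto_folder(appdata), display_name + ".lnk")


if __name__ == "__main__":
    # Fall back to the example path shown earlier when APPDATA is not set
    # (for example, when running this outside Windows).
    appdata = os.environ.get("APPDATA", r"C:\Users\USERNAME\AppData\Roaming")
    print(sendto_folder(appdata))
    print(shortcut_path(appdata, "OneDrive"))
```

The standard-library ntpath module is used instead of os.path so the Windows-style paths come out the same even when the script runs on another operating system.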

Michigan State University engineering researchers have created a new way to harvest energy from human motion, using a film-like device that actually can be folded to create more power. With the low-cost device, known as a nano-generator, the scientists successfully operated an LCD touch screen, a bank of 20 LED lights and a flexible keyboard, all with a simple touching or pressing motion and without the aid of a battery.

The groundbreaking findings, published in the journal Nano Energy, suggest "we're on the path toward wearable devices powered by human motion," said Nelson Sepulveda, associate professor of electrical and computer engineering and lead investigator of the project.

"What I foresee, relatively soon, is the capability of not having to charge your cell phone for an entire week, for example, because that energy will be produced by your movement," said Sepulveda, whose research is funded by the National Science Foundation.

The innovative process starts with a silicon wafer, which is then fabricated with several layers, or thin sheets, of environmentally friendly substances including silver, polyimide and polypropylene ferroelectret. Ions are added so that each layer in the device contains charged particles. Electrical energy is created when human motion, or mechanical energy, compresses the device. The completed device is called a bio-compatible ferroelectret nano-generator, or FENG. The device is as thin as a sheet of paper and can be adapted to many applications and sizes. The device used to power the LED lights was palm-sized, for example, while the device used to power the touch screen was as small as a finger.

Advantages such as being lightweight, flexible, bio-compatible, scalable, low-cost and robust could make FENG "a promising and alternative method in the field of mechanical-energy harvesting" for many autonomous electronics such as wireless headsets, cell phones and other touch-screen devices, the study says. Remarkably, the device also becomes more powerful when folded.

"Each time you fold it you are increasing exponentially the amount of voltage you are creating," Sepulveda said. "You can start with a large device, but when you fold it once, and again, and again, it's now much smaller and has more energy. Now it may be small enough to put in a specially made heel of your shoe so it creates power each time your heel strikes the ground."

Sepulveda and his team are developing technology that would transmit the power generated from the heel strike to, say, a wireless headset.

“Creativity knows no limits”

This one phrase sums up the new and exciting blend of mobility and robust configurability in the HP ZBook 15 Mobile Workstation.

It’s the perfect mix of style, features, and portability. NASA (National Aeronautics and Space Administration) hardly needs an introduction, but one of the projects it is involved in is running the ISS (International Space Station), which sustains the human crews conducting crucial experiments beyond Earth’s atmosphere.

What is ISS?

ISS is a joint project among five space agencies: NASA, Roscosmos of Russia, the Japan Aerospace Exploration Agency, the Canadian Space Agency, and the European Space Agency. The computers on the ISS are upgraded every six years, and this time they selected HP ZBooks.

You ask why?

Well, the Z workstations are tied into almost everything the ISS mission depends on: life support, vehicle control, maintenance, and operations systems. They also provide mission support for onboard experiments, email, entertainment, and more. In short, they handle everything the astronauts on the ISS need to survive, communicate, and do research.

Why did they choose HP ZBooks?

NASA chose the HP ZBook 15 Mobile Workstation for the performance and reliability that HP builds into its mobile workstation solutions. With advanced capabilities that are typically not available in notebooks, like 3D graphics, powerful processors, and massive memory, crew members can be a lot more efficient; even boot-up times are scrutinized when an astronaut’s time is worth more than $100,000 an hour.

DESCRIPTION:

Conquer the professional space with the perfect combination of brains and beauty. The iconic 15.6" diagonal HP ZBook Studio is HP’s thinnest, lightest, and most attractive full-performance mobile workstation. With up to 2 TB of total storage on dual 1 TB HP Z Turbo Drive G2 drives, 32 GB of ECC memory, and optional HP DreamColor UHD or FHD touch displays, this is a killer from HP’s stable.

Work confidently with HP mobile workstations designed around 30 years of HP Z DNA with extensive ISV certification. The all-new ZBook Studio G3 is reliable, designed to pass MIL-STD-810G testing, and has endured 120K hours of testing in HP's Total Test Process.

For the techies: reduce boot-up, calculation, and response times, because the trendsetting HP ZBook handles large files with optional dual 1 TB HP Z Turbo Drive G2 storage for a remarkably fast and innovative solution. Quickly and easily transfer data and connect to devices: this mobile workstation is packed with multiple ports, including dual Thunderbolt 3 ports, HDMI, and more.

“Thus we can say an HP ZBook makes your statement bold and your performance better.”

University of Washington researchers have taken a first step in showing how humans can interact with virtual realities via direct brain stimulation. In a paper published online Nov. 16 in Frontiers in Robotics and AI, they describe the first demonstration of humans playing a simple, two-dimensional computer game using only input from direct brain stimulation -- without relying on any usual sensory cues from sight, hearing or touch.

The subjects had to navigate 21 different mazes, with two choices to move forward or down based on whether they sensed a visual stimulation artifact called a phosphene, which is perceived as a blob or bar of light. To signal which direction to move, the researchers generated a phosphene through transcranial magnetic stimulation, a well-known technique that uses a magnetic coil placed near the skull to directly and noninvasively stimulate a specific area of the brain.

"The way virtual reality is done these days is through displays, headsets and goggles, but ultimately your brain is what creates your reality," said senior author Rajesh Rao, UW professor of Computer Science & Engineering and director of the Center for Sensorimotor Neural Engineering.

"The fundamental question we wanted to answer was: Can the brain make use of artificial information that it's never seen before that is delivered directly to the brain to navigate a virtual world or do useful tasks without other sensory input? And the answer is yes."

The five test subjects made the right moves in the mazes 92 percent of the time when they received the input via direct brain stimulation, compared to 15 percent of the time when they lacked that guidance.

The simple game demonstrates one way that novel information from artificial sensors or computer-generated virtual worlds can be successfully encoded and delivered noninvasively to the human brain to solve useful tasks. It employs a technology commonly used in neuroscience to study how the brain works -- transcranial magnetic stimulation -- to instead convey actionable information to the brain. The test subjects also got better at the navigation task over time, suggesting that they were able to learn to better detect the artificial stimuli.

"We're essentially trying to give humans a sixth sense," said lead author Darby Losey, a 2016 UW graduate in computer science and neurobiology who now works as a staff researcher for the Institute for Learning & Brain Sciences (I-LABS). "So much effort in this field of neural engineering has focused on decoding information from the brain. We're interested in how you can encode information into the brain."

The initial experiment used binary information -- whether a phosphene was present or not -- to let the game players know whether there was an obstacle in front of them in the maze. In the real world, even that type of simple input could help blind or visually impaired individuals navigate.
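The one-bit scheme described above can be sketched in a few lines of Python. Everything in it is hypothetical: the function names, the list-of-flags maze representation, and the assumption, consistent with the description, that a phosphene (an obstacle ahead) means the player should move down rather than forward. It also treats every cue as perfectly perceived; the subjects' 92 percent score shows the real loop was not error-free.

```python
from typing import List

# Hypothetical sketch of the one-bit cue; the names and the maze representation
# are illustrative assumptions, not the UW researchers' software.

def should_stimulate(obstacle_ahead: bool) -> bool:
    """Encoder: fire the stimulator (inducing a phosphene) only when the path ahead is blocked."""
    return obstacle_ahead

def choose_move(phosphene_seen: bool) -> str:
    """Assumed player rule: a phosphene means 'blocked ahead', so go down; otherwise go forward."""
    return "down" if phosphene_seen else "forward"

def play(obstacles: List[bool]) -> List[str]:
    """Walk a maze given as one 'obstacle ahead?' flag per decision point.

    Assumes a noise-free channel: every stimulation is perceived as a phosphene
    and no phosphene is perceived otherwise.
    """
    return [choose_move(should_stimulate(blocked)) for blocked in obstacles]

if __name__ == "__main__":
    print(play([False, True, False]))
```

The point of the sketch is how little the channel carries: one bit per decision point is enough to solve this kind of maze, which is why the researchers could start with simple present-or-absent stimulation.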

Theoretically, any of a variety of sensors on a person's body -- from cameras to infrared, ultrasound, or laser rangefinders -- could convey information about what is surrounding or approaching the person in the real world to a direct brain stimulator that gives that person useful input to guide their actions.

"The technology is not there yet -- the tool we use to stimulate the brain is a bulky piece of equipment that you wouldn't carry around with you," said co-author Andrea Stocco, a UW assistant professor of psychology and I-LABS research scientist. "But eventually we might be able to replace the hardware with something that's amenable to real world applications."

The Bluetooth Special Interest Group (SIG) officially adopted Bluetooth 5 as the latest version of the Bluetooth core specification this week.

Key updates to Bluetooth 5 include longer range, faster speed, and larger broadcast message capacity, as well as improved interoperability and coexistence with other wireless technologies. Bluetooth 5 continues to advance the Internet of Things (IoT) experience by enabling simple and effortless interactions across the vast range of connected devices.

"Bluetooth is revolutionizing how people experience the IoT. Bluetooth 5 continues to drive this revolution by delivering reliable IoT connections and mobilizing the adoption of beacons, which in turn will decrease connection barriers and enable a seamless IoT experience," said Mark Powell, Executive Director of the Bluetooth SIG.

Key feature updates include four times the range, two times the speed, and eight times the broadcast message capacity of Bluetooth 4.2. Longer range provides whole-home and whole-building coverage for more robust and reliable connections. Higher speed enables more responsive, high-performance devices. Larger broadcast messages carry more data, enabling richer, more context-relevant solutions.
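The multipliers in the announcement can be traced to a few commonly quoted Bluetooth low energy figures that the SIG's text itself does not spell out: the original LE 1M PHY versus the new LE 2M PHY, 31-byte legacy advertising payloads versus 255-byte extended advertising payloads, and the LE Coded PHY, which is usually credited with the longer range. The sketch below only does the arithmetic. The constants are assumptions to check against the Bluetooth Core Specification, and the range helper assumes ideal free-space propagation, where quadrupling distance costs about 12 dB of link budget.

```python
import math

# Commonly quoted Bluetooth low energy figures. They are NOT taken from the
# SIG announcement; verify them against the Bluetooth Core Specification.
LE_1M_KBPS = 1000         # LE 1M PHY, the only LE PHY before Bluetooth 5
LE_2M_KBPS = 2000         # LE 2M PHY, new in Bluetooth 5
LEGACY_ADV_BYTES = 31     # legacy advertising data payload
EXTENDED_ADV_BYTES = 255  # extended advertising payload, as commonly quoted

def speed_factor() -> float:
    """Raw PHY bit rate of LE 2M relative to LE 1M."""
    return LE_2M_KBPS / LE_1M_KBPS

def broadcast_factor() -> float:
    """Growth in advertising payload; 255 / 31 is about 8.2, the quoted 'eight times'."""
    return EXTENDED_ADV_BYTES / LEGACY_ADV_BYTES

def coded_phy_kbps(symbols_per_bit: int) -> float:
    """LE Coded PHY keeps the 1 Msym/s symbol rate but spends S = 2 or 8 symbols per bit."""
    if symbols_per_bit not in (2, 8):
        raise ValueError("LE Coded PHY uses S = 2 or S = 8")
    return LE_1M_KBPS / symbols_per_bit

def link_budget_gain_db(range_factor: float) -> float:
    """Extra link budget needed to stretch range by `range_factor`, assuming
    free-space path loss (received power falls off as 1/d**2)."""
    return 20 * math.log10(range_factor)

if __name__ == "__main__":
    print(f"speed: x{speed_factor():.0f}")
    print(f"broadcast payload: x{broadcast_factor():.1f}")
    print(f"LE Coded PHY, S=8: {coded_phy_kbps(8):.0f} kbit/s")
    print(f"4x range in free space: about {link_budget_gain_db(4):.1f} dB more link budget")
```

The numbers also show a trade-off the headline figures hide: the speed gain comes from the 2M PHY and the range gain from the coded PHY, which at S = 8 runs at only 125 kbit/s, so a link does not get both at once.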

Bluetooth 5 also includes updates that help reduce potential interference with other wireless technologies to ensure Bluetooth devices can coexist within the increasingly complex global IoT environment. Bluetooth 5 delivers all of this while maintaining its low-energy functionality and flexibility for developers to meet the needs of their device or application.

Consumers can expect to see products built with Bluetooth 5 within two to six months of today’s release.

Source: Bluetooth.com

Speech recognition systems, such as those that convert speech to text on cellphones, are generally the result of machine learning. A computer pores through thousands or even millions of audio files and their transcriptions, and learns which acoustic features correspond to which typed words. But transcribing recordings is costly, time-consuming work, which has limited speech recognition to a small subset of languages spoken in wealthy nations.

At the Neural Information Processing Systems conference this week, researchers from MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) are presenting a new approach to training speech-recognition systems that doesn't depend on transcription. Instead, their system analyzes correspondences between images and spoken descriptions of those images, as captured in a large collection of audio recordings. The system then learns which acoustic features of the recordings correlate with which image characteristics.

"The goal of this work is to try to get the machine to learn language more like the way humans do," says Jim Glass, a senior research scientist at CSAIL and a co-author on the paper describing the new system. "The current methods that people use to train up speech recognizers are very supervised. You get an utterance, and you're told what's said. And you do this for a large body of data.

"Big advances have been made -- Siri, Google -- but it's expensive to get those annotations, and people have thus focused on, really, the major languages of the world. There are 7,000 languages, and I think less than 2 percent have ASR [automatic speech recognition] capability, and probably nothing is going to be done to address the others. So if you're trying to think about how technology can be beneficial for society at large, it's interesting to think about what we need to do to change the current situation. And the approach we've been taking through the years is looking at what we can learn with less supervision."

Joining Glass on the paper are first author David Harwath, a graduate student in electrical engineering and computer science (EECS) at MIT, and Antonio Torralba, an EECS professor.

Conversely, text terms associated with similar clusters of images, such as "storm" and "clouds," could be inferred to have related meanings. Because the system in some sense learns words' meanings -- the images associated with them -- and not just their sounds, it has a wider range of potential applications than a standard speech recognition system.

To test their system, the researchers used a database of 1,000 images, each of which had a recording of a free-form verbal description associated with it. They would feed their system one of the recordings and ask it to retrieve the 10 images that best matched it. That set of 10 images contained the correct one 31 percent of the time.
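The retrieval test is a recall-at-10 measurement. A minimal stdlib sketch follows, assuming a precomputed score matrix in which recording i's correct image is image i; that layout is a hypothetical convenience, not the researchers' evaluation code. For scale, a random ranking of 1,000 images would put the correct one in the top 10 only 10 times in 1,000, or 1 percent of the time, against the 31 percent reported.

```python
from typing import Sequence

def recall_at_k(scores: Sequence[Sequence[float]], k: int = 10) -> float:
    """Fraction of recordings whose correct image ranks among the top k.

    scores[i][j] is the similarity between recording i and image j, and the
    correct image for recording i is assumed to be image i.
    """
    if not scores:
        raise ValueError("need at least one recording")
    hits = 0
    for i, row in enumerate(scores):
        # Rank image indices from most to least similar and keep the first k.
        top_k = sorted(range(len(row)), key=lambda j: row[j], reverse=True)[:k]
        if i in top_k:
            hits += 1
    return hits / len(scores)

if __name__ == "__main__":
    toy = [
        [0.9, 0.1, 0.0],  # recording 0: correct image ranks first
        [0.2, 0.1, 0.8],  # recording 1: correct image ranks last
        [0.0, 0.3, 0.7],  # recording 2: correct image ranks first
    ]
    print(recall_at_k(toy, k=1), recall_at_k(toy, k=3))
```

With k equal to the number of images every recording counts as a hit, which is why the metric is only informative when k is small relative to the collection, as with 10 out of 1,000.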

"I always emphasize that we're just taking baby steps here and have a long way to go," Glass says. "But it's an encouraging start."

The researchers trained their system on images from a huge database built by Torralba; Aude Oliva, a principal research scientist at CSAIL; and their students. Through Amazon's Mechanical Turk crowdsourcing site, they hired people to describe the images verbally, using whatever phrasing came to mind, for about 10 to 20 seconds. For an initial demonstration of the researchers' approach, that kind of tailored data was necessary to ensure good results. But the ultimate aim is to train the system using digital video, with minimal human involvement.

"I think this will extrapolate naturally to video," Glass says.

To build their system, the researchers used neural networks, machine-learning systems that approximately mimic the structure of the brain. Neural networks are composed of processing nodes that, like individual neurons, are capable of only very simple computations but are connected to each other in dense networks. Data is fed to a network's input nodes, which modify it and feed it to other nodes, which modify it and feed it to still other nodes, and so on. When a neural network is being trained, it constantly modifies the operations executed by its nodes in order to improve its performance on a specified task.

The researchers' network is, in effect, two separate networks: one that takes images as input and one that takes spectrograms, which represent audio signals as changes of amplitude, over time, in their component frequencies. The output of the top layer of each network is a 1,024-dimensional vector -- a sequence of 1,024 numbers.
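The two-branch design can be sketched with plain dense layers. The `Branch` class below is a hypothetical stand-in for the researchers' much deeper networks; the property it shares with them is that data flows layer to layer and both branches end in vectors of the same length (1,024 in the post, 2 in the toy example), so their outputs can be compared with a dot product.

```python
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]

def dense(weights: Matrix, x: Sequence[float]) -> Vector:
    """One fully connected layer (no bias): out[i] = sum_j weights[i][j] * x[j]."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in weights]

def relu(v: Vector) -> Vector:
    """Elementwise max(0, a): a simple per-node nonlinearity."""
    return [max(0.0, a) for a in v]

class Branch:
    """Hypothetical stand-in for one of the two networks (image or spectrogram).

    Each layer's output feeds the next; the last layer's output is the branch's
    fixed-length embedding vector.
    """

    def __init__(self, layers: List[Matrix]) -> None:
        self.layers = layers

    def __call__(self, x: Sequence[float]) -> Vector:
        h: Vector = list(x)
        for i, w in enumerate(self.layers):
            h = dense(w, h)
            if i < len(self.layers) - 1:  # no nonlinearity on the output layer
                h = relu(h)
        return h

def similarity(image_branch: Branch, audio_branch: Branch,
               image_x: Sequence[float], audio_x: Sequence[float]) -> float:
    """Embed both inputs with their own branch and compare them with a dot product."""
    u, v = image_branch(image_x), audio_branch(audio_x)
    if len(u) != len(v):
        raise ValueError("both branches must output vectors of the same length")
    return sum(a * b for a, b in zip(u, v))

if __name__ == "__main__":
    image_branch = Branch([[[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]])            # 3 inputs -> 2
    audio_branch = Branch([[[1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]])  # 4 inputs -> 2
    print(similarity(image_branch, audio_branch, [0.5, 2.0, 9.0], [0.25, 0.25, 1.0, 1.0]))
```

The inputs to the two branches can have different sizes and shapes (pixels versus spectrogram bins); only the output length has to match.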

The final node in the network takes the dot product of the two vectors. That is, it multiplies the corresponding terms in the vectors together and adds them all up to produce a single number. During training, the networks had to try to maximize the dot product when the audio signal corresponded to an image and minimize it when it didn't. For every spectrogram that the researchers' system analyzes, it can identify the points at which the dot-product peaks. In experiments, those peaks reliably picked out words that provided accurate image labels -- "baseball," for instance, in a photo of a baseball pitcher in action, or "grassy" and "field" for an image of a grassy field.
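The dot-product score, the training goal, and the peak-picking step can be sketched as follows. Two pieces are assumptions rather than details from the post: the margin-based ranking loss (a standard way to push matched scores above mismatched ones, with a hypothetical margin of 1.0), and the idea that the audio branch emits one vector per spectrogram frame, so each frame can be scored against the image vector before any pooling.

```python
from typing import List, Sequence

def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Multiply corresponding terms and add them up to a single number."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))

def ranking_loss(audio: Sequence[float], image: Sequence[float],
                 impostor_audio: Sequence[float], impostor_image: Sequence[float],
                 margin: float = 1.0) -> float:
    """Assumed margin-based objective. It is zero once the matched pair
    outscores both mismatched pairs by at least `margin` and grows otherwise,
    so minimizing it raises matched scores and lowers mismatched ones."""
    matched = dot(audio, image)
    loss = max(0.0, margin - matched + dot(audio, impostor_image))
    loss += max(0.0, margin - matched + dot(impostor_audio, image))
    return loss

def peak_frames(frame_vectors: List[Sequence[float]], image: Sequence[float]) -> List[int]:
    """Score every spectrogram frame against the image vector and return
    the indices of local maxima of that score over time."""
    scores = [dot(f, image) for f in frame_vectors]
    peaks = []
    for t, s in enumerate(scores):
        left = scores[t - 1] if t > 0 else float("-inf")
        right = scores[t + 1] if t + 1 < len(scores) else float("-inf")
        if s > left and s >= right:  # on a flat top, keep the first frame
            peaks.append(t)
    return peaks

if __name__ == "__main__":
    print(ranking_loss([1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 0.0]))
    print(peak_frames([[0.0], [1.0], [3.0], [1.0], [2.0], [0.0]], [1.0]))
```

In a real system the frames at the returned indices would then be mapped back to the stretch of audio they cover, which is where words such as "baseball" or "grassy" would be found.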

In ongoing work, the researchers have refined the system so that it can pick out spectrograms of individual words and identify just those regions of an image that correspond to them.

Source: Materials provided by Massachusetts Institute of Technology. Original written by Larry Hardesty.
